10G IPERF testing

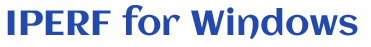

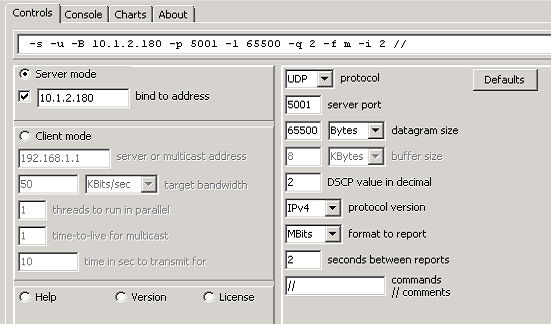

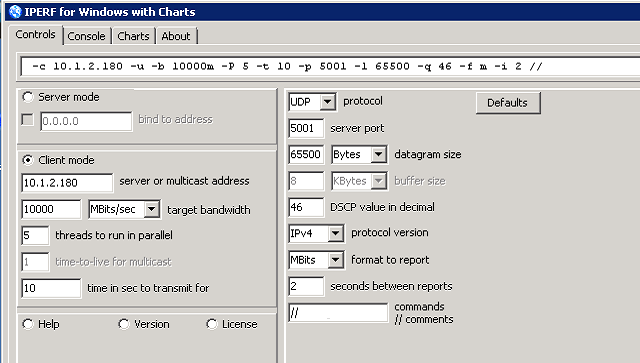

One of our clients shared his experience testing a 10G back-to-back link between two servers. The first three charts are from the iperf Client. Note that the UDP datagram size is set to 65500 bytes, close to the maximum. He found that setting it to 64 KBytes somehow affects Windows Task Manager: it doesn't show the traffic.
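The datagram size limit can be checked with a little arithmetic. The sketch below assumes IPv4 with a 20-byte header (no options) and, for the fragment count, a standard 1500-byte Ethernet MTU; the actual MTU on the tested link is not stated, and the function names are made up for illustration. It shows that 65500 bytes fits under the UDP payload limit while 64 KBytes (65536 bytes) does not. This may be related to the Task Manager behaviour, although the test itself did not establish the cause.

```python
import math

IPV4_MAX_TOTAL_LENGTH = 65535  # limit of the 16-bit IPv4 Total Length field
IPV4_HEADER = 20               # assumption: IPv4 header without options
UDP_HEADER = 8


def max_udp_payload() -> int:
    """Largest UDP payload that fits in a single IPv4 datagram."""
    return IPV4_MAX_TOTAL_LENGTH - IPV4_HEADER - UDP_HEADER


def fragments_per_datagram(payload: int, mtu: int = 1500) -> int:
    """Number of IP fragments needed for one UDP datagram.

    The MTU default of 1500 is an assumption, not a value from the test.
    Fragment data must be a multiple of 8 bytes (except in the last fragment).
    """
    per_fragment = (mtu - IPV4_HEADER) // 8 * 8
    return math.ceil((payload + UDP_HEADER) / per_fragment)


if __name__ == "__main__":
    limit = max_udp_payload()
    for size in (65500, 64 * 1024):
        verdict = "fits" if size <= limit else "exceeds the limit"
        print(f"{size} bytes: {verdict} (limit {limit})")
    print("fragments per 65500-byte datagram:", fragments_per_datagram(65500))
```

Under these assumptions each 65500-byte datagram is split into dozens of IP fragments, and losing any single fragment discards the whole datagram, which is worth keeping in mind when reading the packet loss figures on the Server side.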

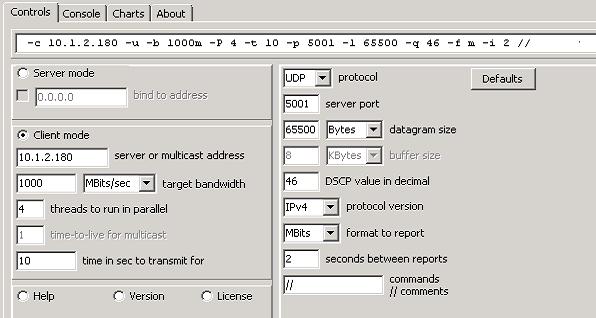

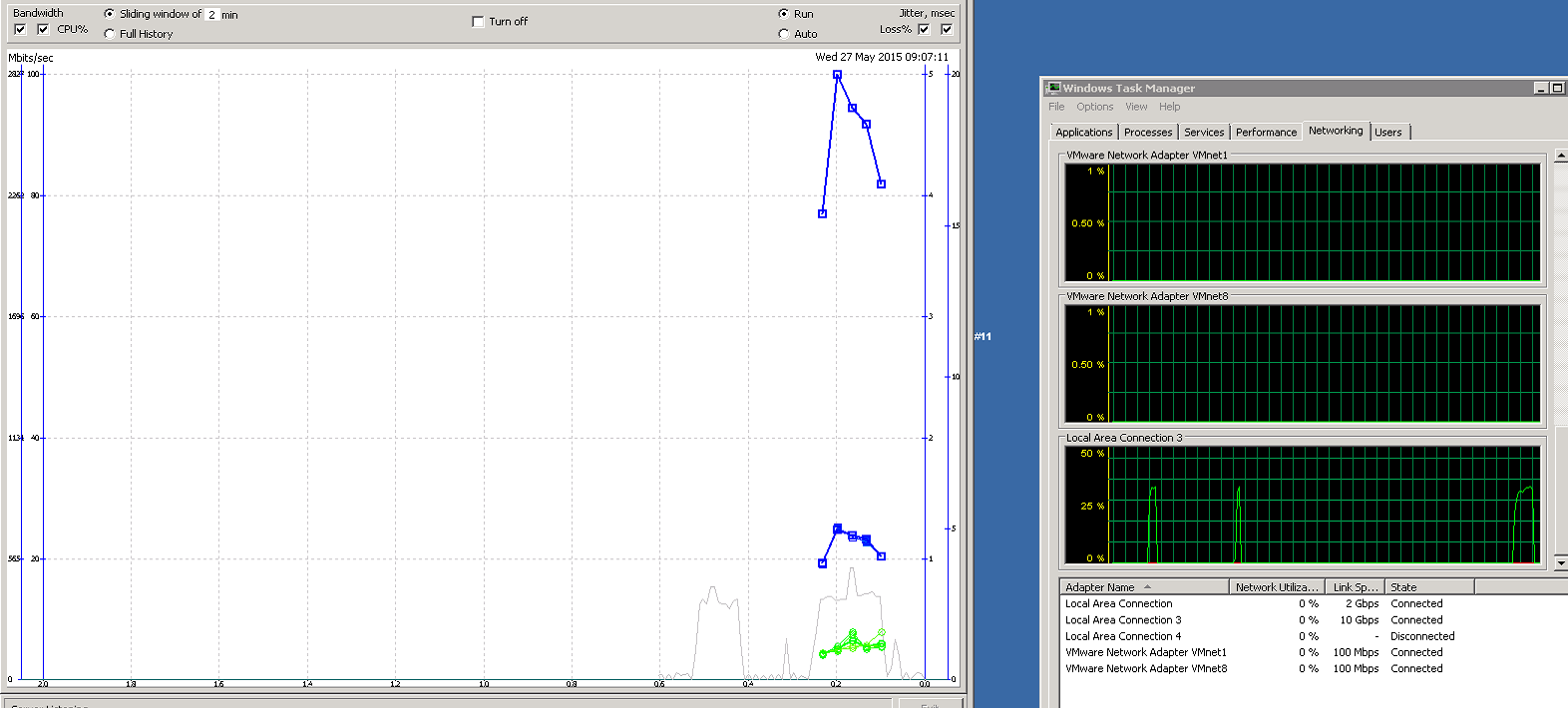

Four threads together pushed around 3 Gbits/sec of traffic:

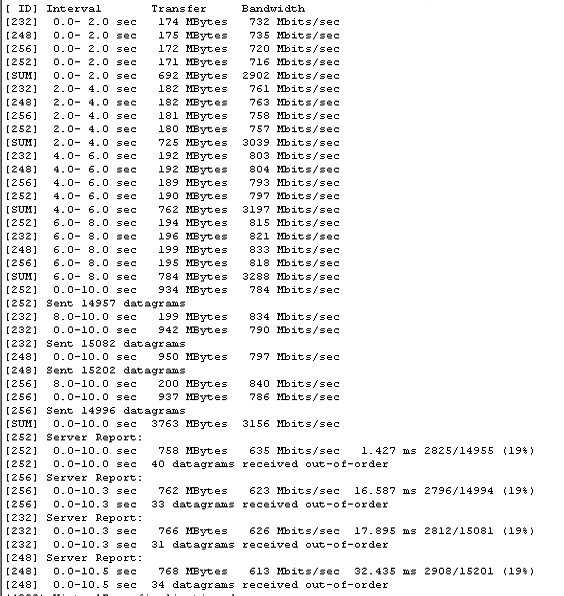
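For readers who want to reproduce a similar run from the command line, here is a minimal sketch that builds a four-thread UDP client invocation. The exact settings the client used are only visible in the screenshots, so everything here is an assumption: the helper name, the server address `192.0.2.10` (a documentation placeholder), the target rate and the duration are all hypothetical. Only the widely used iperf options `-c`, `-u`, `-l`, `-P`, `-b` and `-t` are relied on.

```python
import shlex


def iperf_udp_client_cmd(server, threads=4, datagram_bytes=65500,
                         bandwidth="1000M", seconds=10):
    """Build an iperf UDP client command line (hypothetical helper).

    -c  connect to the given server
    -u  use UDP instead of TCP
    -l  datagram (buffer) length in bytes
    -P  number of parallel client threads
    -b  target UDP rate
    -t  test duration in seconds
    """
    return [
        "iperf", "-c", server, "-u",
        "-l", str(datagram_bytes),
        "-P", str(threads),
        "-b", bandwidth,
        "-t", str(seconds),
    ]


if __name__ == "__main__":
    # 192.0.2.10 is a placeholder address, not the server from the test.
    print(shlex.join(iperf_udp_client_cmd("192.0.2.10")))
```

The matching Server side would typically be started with `iperf -s -u`. The command list can be passed to `subprocess.run` on a machine where iperf is installed.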

The per-thread charts and the total for the 4 threads match Task Manager. CPU load is around 70% on the Client:

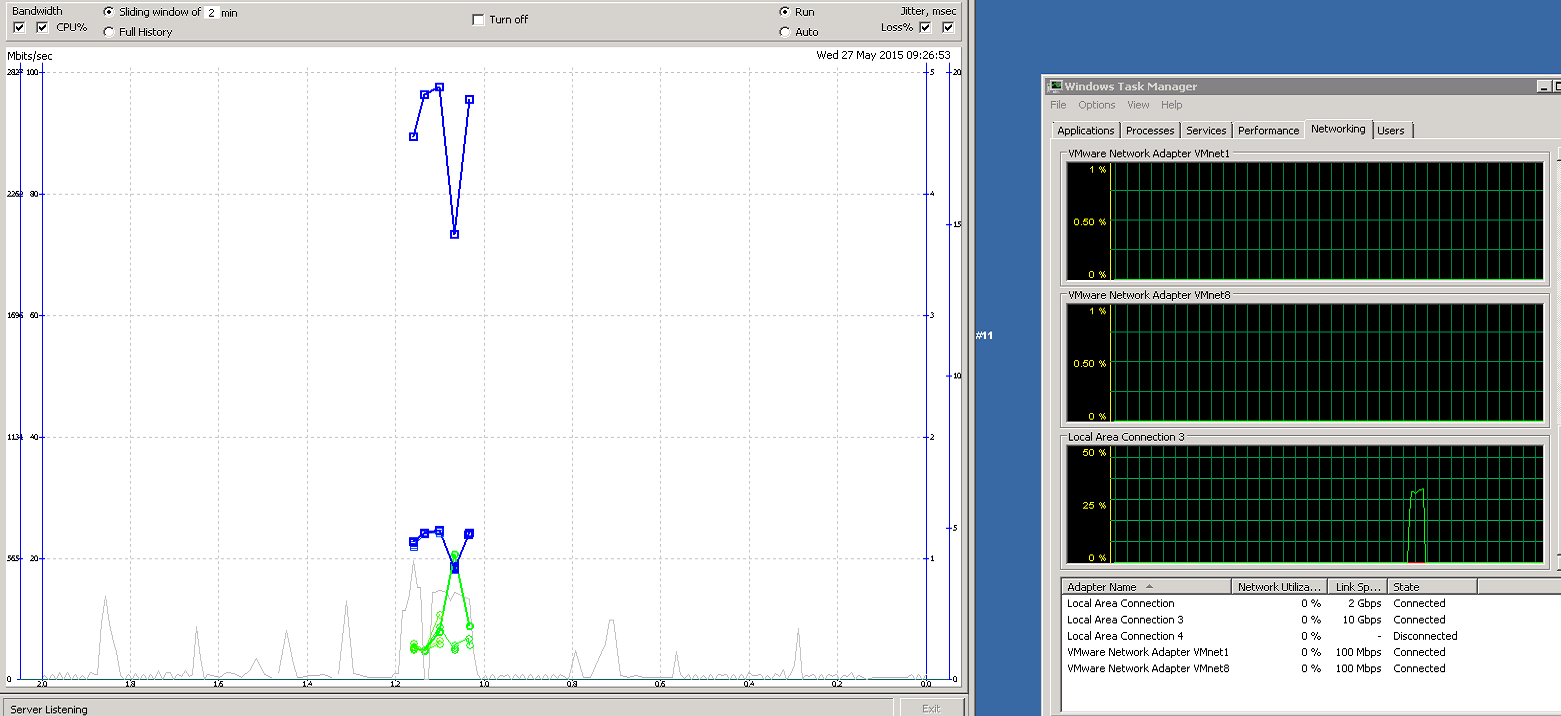

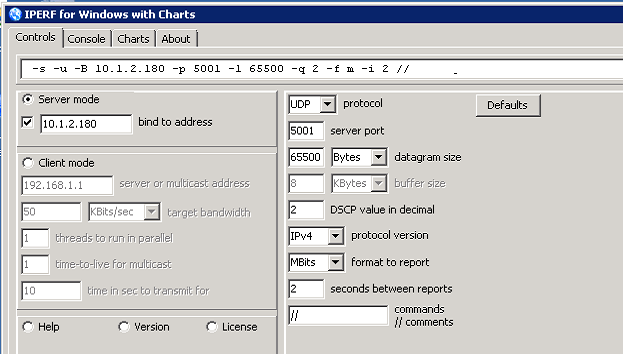

This is the Server side:

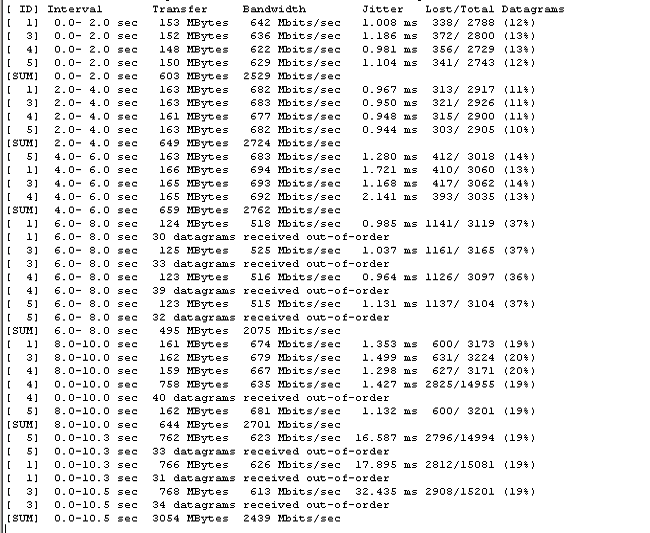

Packet loss is quite significant:

Server CPU is less loaded:

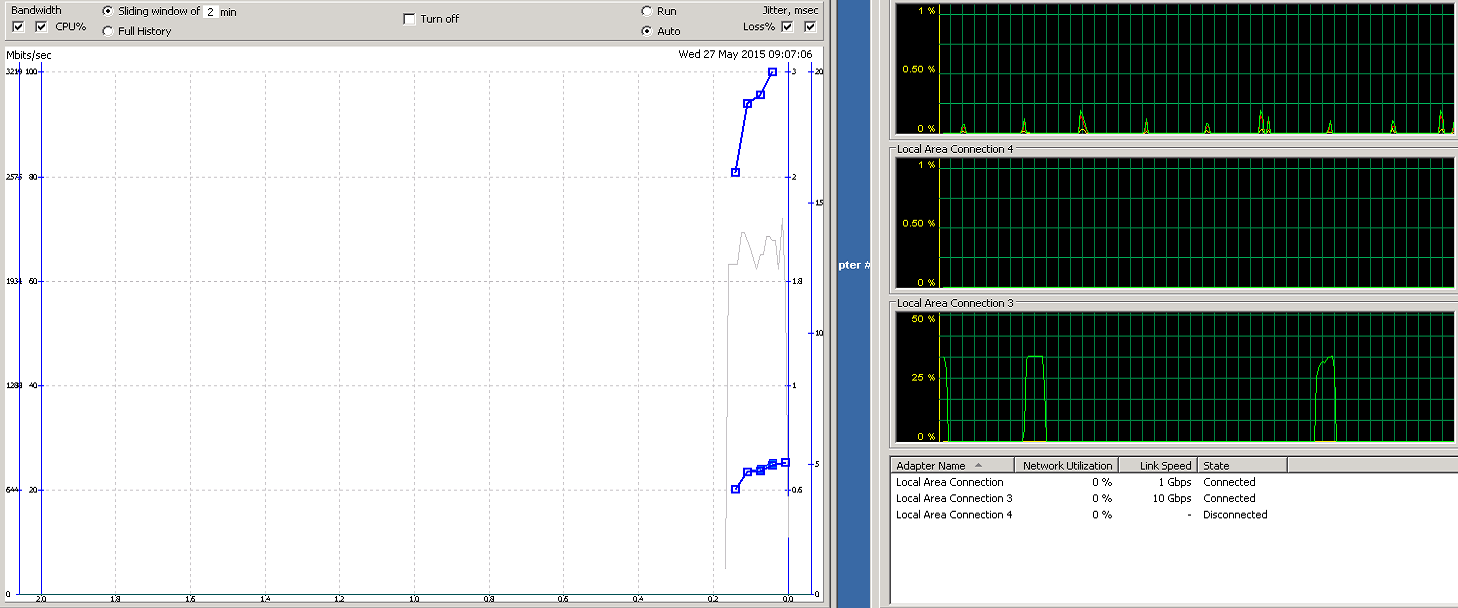

Another set of tests on Client:

..and Server, with almost the same results:

Minor tuning of the parameters didn't help much, on the Client:

..and Server:

When he launched a second iperf Client - Server pair, the total aggregate bandwidth was still around 3 Gbits/sec, which points to a possible problem with the 10G network cards. The bottleneck was shown not to be on the iperf side.